We have a screener we use when hiring accountants at Eight One Partners. It's not a huge thing. Qualified candidates should finish it in less than 15 minutes, as it only covers some very basic accounting. The problem is that it is a spreadsheet, and going through spreadsheets gets tedious. The questions are also somewhat open ended so we can evaluate judgement, but that makes it even harder to compare the spreadsheet we received with the answer key.

Maybe ChatGPT could help? We spent several hours trying to get ChatGPT to accurately display the differences between two spreadsheets. We could not get it to work right 100% of the time.

If you're an engineer, you're probably wondering, "Why not just write a script? Comparing spreadsheets is easy." You would be right. I ended up writing a script. It took me all of 2 hours, and that includes a reasonable GUI for our staff. We would have saved a lot of time if we had never tried ChatGPT in the first place.
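To give a sense of how small the core of such a comparison can be, here is a minimal sketch. It is not the script described above. It assumes both spreadsheets have been exported to CSV, and the names `diff_sheets`, `column_letter`, and `read_csv_text` as well as the sample data are made up for illustration. It only flags the cells that differ, so a person still has to judge the open-ended answers.

```python
import csv
import io
from itertools import zip_longest


def column_letter(index):
    """Convert a 0-based column index to a spreadsheet letter (0 -> A, 26 -> AA)."""
    letters = ""
    index += 1
    while index > 0:
        index, remainder = divmod(index - 1, 26)
        letters = chr(ord("A") + remainder) + letters
    return letters


def diff_sheets(expected_rows, actual_rows):
    """Return (cell, expected, actual) for every cell whose trimmed text differs."""
    differences = []
    # zip_longest pads the shorter sheet so extra rows and columns are reported too.
    for r, (exp_row, act_row) in enumerate(
        zip_longest(expected_rows, actual_rows, fillvalue=[])
    ):
        for c, (exp, act) in enumerate(zip_longest(exp_row, act_row, fillvalue="")):
            if exp.strip() != act.strip():
                differences.append((f"{column_letter(c)}{r + 1}", exp, act))
    return differences


def read_csv_text(text):
    """Parse CSV text into a list of rows (hypothetical helper for this sketch)."""
    return list(csv.reader(io.StringIO(text)))


# Made-up sample data standing in for an answer key and a candidate's submission.
answer_key = read_csv_text("Account,Debit,Credit\nCash,100,\nRevenue,,100\n")
submission = read_csv_text("Account,Debit,Credit\nCash,100,\nRevenue,,90\n")

for cell, expected, actual in diff_sheets(answer_key, submission):
    print(f"{cell}: expected {expected!r}, got {actual!r}")
# prints: C3: expected '100', got '90'
```

Unlike a chat prompt, this gives the same answer every time for the same two files, which is the property we could not get out of ChatGPT.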

This experience reminded me of the saying "when you have a hammer, everything looks like a nail." Even someone as skeptical of the AI hype as me fell for that fallacy. I can only imagine what folks who believe in the hype are doing.

Why are we all treating AI as a hammer? I think it is because we have muddied the waters around what counts as AI, and specifically what counts as AGI (hint: AGI has nothing to do with profits). AI has been around in some form for decades. Google search is AI. Image recognition software is AI. Recommendation engines are AI. Each of these is specialized for one use case, however. They do one thing and do it well. They don't have dreams. They don't create their own goals. They're not sentient.

AI in the movies has always been more. Skynet in Terminator. Androids in Blade Runner. The Matrix. The AI in movies has always been this fantastical thing that could think and act like humans, but better. That has given most people an impression of AI that is very different from what AI has actually been for decades.

The AI that we have been using is machine learning. It is mostly just pattern matching. It may be extremely advanced and fancy pattern matching, but it is pattern matching nonetheless. It does not actually understand concepts. It can only recognize whether something matches a particular pattern or not. I'd argue that the hallucinations everyone is talking about are not a bug that can be fixed. They are an attribute of the pattern matching approach.

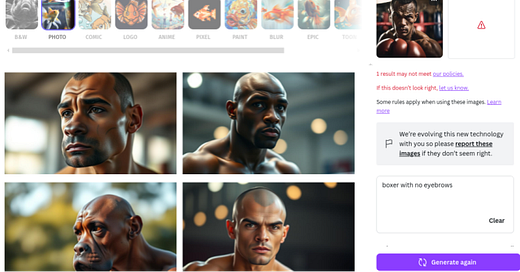

Side note: I tried to get AI to generate an image of a boxer with no eyebrows for one of my previous posts. It was not able to because it does not actually know what a boxer or an eyebrow is. It only knows that images that look like the millions of images of boxers in the training data have the label "boxer".

The AI we see in movies is AGI. That is the big one. The singularity. The thing that may want to kill all humans. It's also the form of AI that is a lot harder to build. We barely know how the human brain works. Building AGI would mean simulating something whose fundamentals we don't fully understand. The best analogy I can think of would be building rockets without understanding gravity.

What we have today isn't close to AGI. LLMs like ChatGPT and Gemini are not AGI. They are amazing tools and the next evolution of machine learning, but they run on the same principle of fancy pattern matching. They just happen to be so good at pattern matching that some of their output looks like human intelligence on the surface.

Machine learning has been around for decades though. Better machine learning is not exciting. It does not get people hyped up. It does not get people to open up their wallets. It does not get investors to open up their wallets.

Skynet is exciting. The singularity is exciting. The fear of being replaced may be terrifying, but terror turns to FOMO and FOMO creates excitement. What's the easiest way to make people excited about LLMs? Change the definition of AGI. If you change the definition of AGI, people will automatically associate what you've built with the AI in movies. It doesn't matter that what you've built isn't even close. There's a lot of money to be made by changing the definition of AGI.

That's ultimately why I think everyone defaults to using an LLM even when other tools are much better for the job. The definitions around AI have been so muddied that people associate LLMs with the AI we're familiar with from movies. Our reflex is to act as if ChatGPT can do anything. It is the hammer we're excited to use.