The Problem With The Term "10x Developer"

Developer productivity cannot be measured the same way across all software development

The term "10x developer" has been thrown around a lot over the years. I've been asked if I thought I was one more times than I can count. Usually it was by someone who wasn't a developer and did not really know how to evaluate developer productivity.

I don't disagree with the concept that some developers are more productive than others. My issue is that not all software development is the same and productivity has to be measured differently depending on the context.

Consider the origin of the term. A paper written in the 1960s first described how some programmers were an order of magnitude more productive than others. The paper's age creates two glaring problems that make it less applicable today.

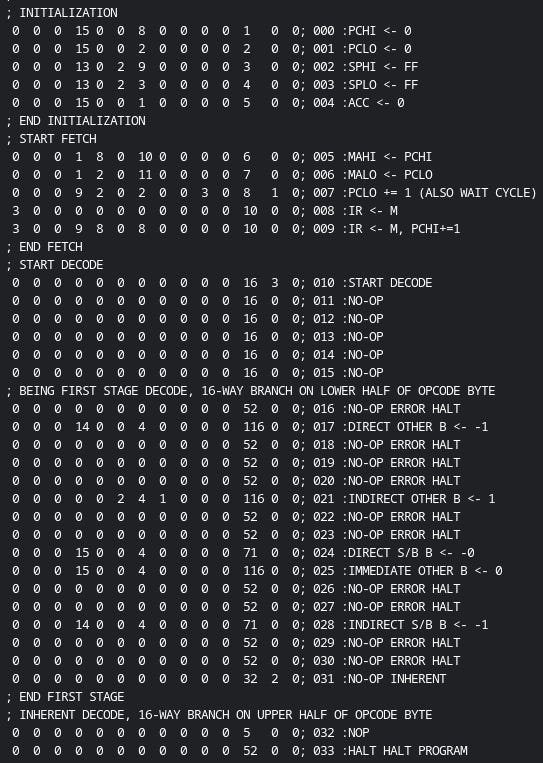

The first is that programming was very VERY different back then. I had a college project (not in the 1960s) to implement functions for an assembly language. Not writing code in assembly, implementing assembly. Every line of code was a fixed set of bits. There was no program counter (the register that keeps track of which instruction runs next), so every line had to specify which line of code was next. We only had 9 bits allocated for that GOTO, which capped the code at 2^9 = 512 lines. Couldn't figure out how to get everything implemented in 512 lines? Tough.

The code is in base 10 for readability, but it is all binary in reality. I spent two hours fixing an issue where all I needed to do was reverse two bits. If I had been able to see the problem bits in 5 minutes, that would have been a 24x developer move.
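To make the 9-bit limit and the swapped-bits bug concrete, here is a minimal Python sketch. The word layout (a 9-bit next-line field in the low bits, with everything above it treated as an opaque operation payload) and the names `encode`, `decode_next`, and `swap_bits` are assumptions for illustration, not the actual format from that project.

```python
NEXT_BITS = 9                      # width of the "next line" field, as described above
NEXT_MASK = (1 << NEXT_BITS) - 1   # nine 1-bits: the largest encodable line is 511
MAX_LINES = 1 << NEXT_BITS         # 2**9 == 512 addressable lines


def encode(op: int, next_line: int) -> int:
    """Pack a hypothetical instruction word: op payload above, next-line field below."""
    if op < 0:
        raise ValueError("op must be non-negative")
    if not 0 <= next_line < MAX_LINES:
        raise ValueError(f"next_line {next_line} does not fit in {NEXT_BITS} bits")
    return (op << NEXT_BITS) | next_line


def decode_next(word: int) -> int:
    """Extract the line that should run after this one."""
    return word & NEXT_MASK


def swap_bits(value: int, i: int, j: int) -> int:
    """Return value with bit positions i and j exchanged."""
    if ((value >> i) & 1) != ((value >> j) & 1):
        value ^= (1 << i) | (1 << j)
    return value


if __name__ == "__main__":
    word = encode(op=5, next_line=6)           # intended: jump to line 6
    buggy = swap_bits(word, 0, 1)              # two low bits reversed by mistake
    print(decode_next(word), decode_next(buggy))
```

With this layout, reversing two bits in the next-line field silently sends execution to a different line, and nothing in the word itself flags the mistake. That is the kind of bug that takes hours to spot when all you have is a column of numbers.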

Assembly isn't that much easier to work with. Higher level languages like Fortran existed, but assembly was used because of the memory constraints that existed back then. The navigation and targeting systems for a fighter jet in the late 1970s had to fit into 64 kilobytes of memory. A "hello world" program in Java uses 2-3 orders of magnitude more than that. Spending two days trying to cut memory usage down by a few bytes back then was a necessary endeavor. Spending two days to cut that amount of memory usage today could get you fired.

In a world with these constraints, a 10x developer could write more code not because they could type faster, but because they would make fewer mistakes and be able to fix their mistakes faster.

That leads to the second problem: the mistakes that mattered were very different back then. Small mistakes in the code would result in hours, possibly days, of debugging. Today, programmers still make plenty of small mistakes, like inverting a boolean by accident. Those problems are usually solved in less than a minute. The impactful mistakes of today tend to be structural. What programming language is being used? Which framework, if there is going to be one? What database do we use? What schema do we need? Should we go with AWS, Google Cloud, or operate a data center? We have a lot more capabilities today. That gives us more options, including terrible ones.

That's not to say that code quality doesn't matter. It does. Programming just happens to be easier today thanks to higher level languages and the better hardware that lets us use them. That has lowered the barrier to entry for making software, which has expanded where software is being used. Software used to require a lot of training to use. Today, any consumer application that requires a manual will fail. We have to worry a lot more about UX now. No one other than a programmer wants to deal with a terminal.

UX is a tricky thing. No amount of prep can get you a solid answer until working software is in the hands of users. That means that code needs to be structured in a way that allows us to apply feedback quickly. The performance optimizations used decades ago create a rigidity in the code that makes it harder to apply that feedback. What was an essential activity in systems that required those optimizations has become a liability in most modern software applications.

Unfortunately, the culture and perception around software development have not adapted to what is required for software applications today. A perfect example is the stubbornness around the continued use of leetcode interviews. That entire interview process is based on the assumption that developer productivity today is measured exactly the same way as it was 50 years ago. That assumption may be true in some situations, such as operating systems, search algorithms, or developing LLMs. The percentage of developers working in those situations is fairly small compared to the number of developers working on CRUD apps or apps that use LLMs, where that assumption is as far from true as it can get.

That nuance does not exist in the term "10x developer". It's mentally easier to assume there is a universal measure for developer productivity, so most people go with that assumption. The result is that companies develop interview processes, performance review processes, and SDLCs that are unfit for their actual needs.